Would you use AI to hunt mushrooms?

How to improve the use of technology in high-risk choices.

The Intelligent Friend - The newsletter about AI-human relationships, based only on scientific papers.

Intro

Imagine that you have finally set off on the walk in the woods, or the trip, that you have been wanting to take for a long time. You put on comfortable shoes, packed your rucksack, and started out, full of enthusiasm, on your personal adventure. At some point you notice a mushroom on a tree. You are intrigued: it could be your prize, a souvenir of your journey into nature. But then a doubt occurs to you. The mushroom could be poisonous. So you look for technology that can help, for instance an AI-driven app that can tell whether a mushroom is edible. But how much would you actually trust it? Would you use it? What would you rely on in your exploration?

The paper in a nutshell

Title: Effects of Explainable Artificial Intelligence on trust and human behavior in a high-risk decision task. Authors: Leichtmann et al. Year: 2023. Journal: Computers in Human Behavior.

Main result: in a mushroom hunt, which can be described as a high-risk task, visual explanations of the AI's mushroom identification predictions significantly improved people's performance in the picking task. Explaining how the AI arrived at its result thus has a significant effect on the task performed with the help of the technology.

Starting from the concepts of AI literacy and Explainable AI (XAI), the authors tried to understand how an educational intervention about how the AI works, and visual explanations of its predictions, influence users' cognition and behavior, specifically in terms of trust and performance.

High-risk tasks and AI

Let’s go back to our adventure and mushroom hunting. Have you thought about what your answer might be? Would you feel more or less safe with the support of your AI-driven app? The scenario I have described is a high-risk task: if you get it wrong, the result could be harmful, or in some cases even fatal. And there are many situations in which AI provides support in such tasks. Think, for instance, of medical image analysis [1]. A misclassification in a diagnosis can lead to the wrong treatment, as well as to over- or under-estimation of a disease.

So how can the relationship between humans and these technologies be improved, so that people rely on them neither too much nor too little and are aware of how certain results are reached? Today's authors explore and broaden a possible answer to this question. First of all, people must build trust, and that trust must be calibrated on the basis of what they know about the technology in front of them. Naturally, trust develops over time as users learn about a system's functionality and limitations. Ideally, this knowledge leads to a perfect alignment between trust and the system's actual performance [2].

How, then, do we achieve this and improve people's performance in such high-risk tasks? There are two main approaches through which users can be made more aware of the choice they are making by knowing how the AI works: explainable AI (XAI) [3] and improving people's AI literacy [4].

Improving the AI literacy

The first major path is to improve people's education and knowledge about the technology. Think of it exactly this way: you don't know something, so you educate yourself and learn about it. Don't know how to prepare a dessert? Learn how. Don't know statistics? Try to understand its foundations.

Of course this is a simplification, but it is a useful one because, in reality, AI literacy is not so easy to define. It broadly includes different elements, such as knowledge and understanding of AI itself (defined as knowing the basic functions of AI). Today's authors summarize it by writing that "the general ability of users to comprehend AI technology and the ability to use it are often labeled AI literacy".

I really like this summary, so we'll use it from now on. Also because, as you may have guessed, "literacy" (which we now understand as knowledge and understanding of what we are dealing with) is not something related only to AI. You may have heard, for example, of "digital literacy" [5] or "ICT literacy" [6]. The authors also go into important detail on the cognitive processes and comprehension abilities involved, which we do not report here.

One thing I want to reiterate (as mentioned by the authors) is that understanding new technologies is key to building trust and using them safely [7].

For instance, a study [8] showed that even a basic introduction to an automated vehicle before driving could significantly impact user trust and behavior. Participants who received some information about the system had lower collision rates in a driving simulator compared to those who didn't.

What is Explainable AI?

In addition to improving people's knowledge and understanding of AI, another way to give insight into the AI system used for a given (especially high-risk) task is to let the AI do the explaining for you. In essence, the AI becomes "explainable" (XAI).

Not all attempts to adequately illustrate how these systems work have produced exciting results, especially because of technical difficulties. Still, a large body of literature has attempted to classify the various techniques into three large groups, which are very interesting and also useful for personal knowledge: feature-based, attribution-based, and example-based.

Feature-based: this type analyzes the internal representations (called "features") that the neural network learned during training to explain its decisions. Visualizations show that early layers learn low-level concepts, while later layers learn high-level ones [9, 10];

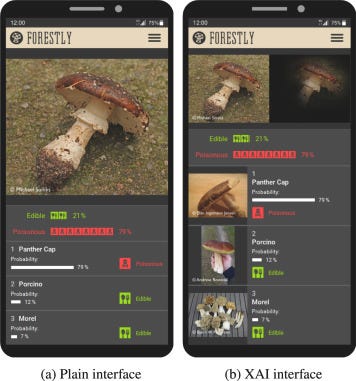

Attribution-based: as summarized by the authors, this type, commonly used in image classification, “…explain(s) which image regions were important for a model's decision. These regions are visualized directly on the image”. The intervention used with the study participants falls into this category;

Example-based: this group of techniques selects specific data items as examples and shows them to users. Examples can be prototypes (typical representatives of particular classes) or counter-examples that differ from prototypes.
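To make the attribution-based idea a little more concrete, here is a minimal sketch of occlusion sensitivity, one simple attribution technique: you hide one image region at a time and measure how much the model's score drops. This is only an illustration and not necessarily the technique used in the study. The `toy_model` function, the 4x4 "image" and the patch size are all hypothetical stand-ins, not anything taken from the paper or from the Forestly app.

```python
from typing import Callable, List

Image = List[List[float]]


def toy_model(image: Image) -> float:
    """Hypothetical stand-in for a classifier.

    Returns a score equal to the mean brightness of the top-left 2x2 corner,
    so we know in advance which region "matters" to it.
    """
    corner = [image[r][c] for r in range(2) for c in range(2)]
    return sum(corner) / len(corner)


def occlusion_map(
    image: Image, model: Callable[[Image], float], patch: int = 2
) -> List[List[float]]:
    """Blank out one patch at a time and record how much the score drops."""
    height, width = len(image), len(image[0])
    baseline = model(image)
    heatmap = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            occluded = [line[:] for line in image]  # copy, keep original intact
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    occluded[r][c] = 0.0
            # A large drop means the hidden region was important to the model.
            row.append(baseline - model(occluded))
        heatmap.append(row)
    return heatmap


image = [[1.0] * 4 for _ in range(4)]  # a uniformly bright 4x4 "image"
heatmap = occlusion_map(image, toy_model)
print(heatmap)
```

In a real app, a heatmap like this would be drawn over the mushroom photo, so that the user can see which regions drove the prediction.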

Calibrate your trust

So, we have seen that there are essentially two broad ways to improve people's understanding of an AI system. But what did the authors try to investigate? They carried out an exploratory experiment (in short, they did not formulate hypotheses beforehand) to understand how an intervention on people's AI literacy and an intervention based on explainable AI affect users' trust, as well as their behavior.

Now, a clarification must be made about trust. You will have guessed for yourself that this psychological construct is a fundamental factor in the interactions between humans and machines [11]. It can be defined as an ‘attitudinal construct that describes the willingness of users to be vulnerable to the actions of some automated system to achieve some goal’ [12].

However - and this is one of the most interesting things in the paper - the authors specify that a high level of trust cannot in itself be the objective to be achieved. You're probably wondering why, right? Well, as usual, let's take an example of a very simple practical case.

Imagine that you are using a chatbot assistant that advises you on how to prepare the famous dessert we talked about before, for example a Mother's Day cake. Now, imagine that you have prepared the ingredients, baked the cake and added the finishing touches.

Then, out of curiosity or by chance, you read that this AI assistant is not recommended for any task other than, say, writing marketing texts, because it has not been well trained for anything else. Now your level of trust will go down, but only for that particular task. If you happen to work in marketing, you could still use it for your agency or the company where you work.

What have you done? You changed your level of trust depending on what you knew about the tool. That is, you calibrated it. This is exactly the goal the authors describe: an "appropriate level of trust that also corresponds with the competencies of the system should be achieved" [13].
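If you like to think in numbers, the cake example can be sketched as a tiny calculation. This is my own illustrative simplification, not a measure taken from the paper: I assume trust and system reliability can both be put on a 0-1 scale, and I call trust "calibrated" when the two are within a tolerance of each other. The function names, the tolerance and all the numbers are hypothetical.

```python
def calibration_gap(trust: float, reliability: float) -> float:
    """Positive gap = over-trust, negative gap = under-trust (0-1 scales)."""
    return trust - reliability


def describe_trust(trust: float, reliability: float, tolerance: float = 0.1) -> str:
    """Label trust relative to reliability, using an arbitrary tolerance."""
    gap = calibration_gap(trust, reliability)
    if gap > tolerance:
        return "over-trust"
    if gap < -tolerance:
        return "under-trust"
    return "calibrated"


# Made-up numbers for the chatbot in the cake example.
reliability = {"marketing copy": 0.9, "baking advice": 0.4}
trust_before = {"marketing copy": 0.9, "baking advice": 0.9}  # before reading the warning
trust_after = {"marketing copy": 0.9, "baking advice": 0.4}   # after reading the warning

before = {task: describe_trust(trust_before[task], reliability[task]) for task in reliability}
after = {task: describe_trust(trust_after[task], reliability[task]) for task in reliability}

print(before)
print(after)
```

The point of the sketch is that the "after" state is not one of higher trust overall. Trust simply matches what the system can actually do, task by task.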

Forestly and results

The authors conducted an online study focusing on mushroom identification and picking, where participants were assigned the tasks of classifying mushroom pictures as either edible or poisonous, and making decisions on whether to pick the mushroom for cooking or leave it. To assist in their decision-making process, participants were provided with a mock-up of an app called Forestly, which integrated AI classification results.

To test the impact of the different interventions, the authors assigned participants to different combinations of them. To test the effect of the educational intervention (AI literacy), one group received an introduction to machine learning. As for XAI, some respondents saw only the result given by the app, while the others also saw visual explanations of that result.
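The combinations described above can be read as a two-by-two layout: educational introduction or not, visual explanation or not. Here is a minimal sketch of how such conditions could be enumerated and balanced across participants. The condition labels, the number of participants and the shuffling procedure are my assumptions for illustration; the newsletter does not report how the authors actually assigned people.

```python
import itertools
import random
from collections import Counter

# Hypothetical labels for the two manipulated factors.
EDUCATION = ("no introduction", "introduction to machine learning")
EXPLANATION = ("app result only", "app result plus visual explanation")

# Every combination of the two factors: four conditions in total.
conditions = list(itertools.product(EDUCATION, EXPLANATION))


def assign(participant_ids, seed=0):
    """Shuffle participants, then deal them out to conditions in turn."""
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(shuffled)}


assignment = assign(range(8))
group_sizes = Counter(assignment.values())
for condition, size in group_sizes.items():
    print(condition, size)
```

Dealing shuffled participants out in turn keeps the four groups the same size, which makes it easier to compare the effect of each intervention.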

The initial educational intervention about AI functionality didn't significantly impact trust calibration. This might be due to the brevity of the explanation compared to more in-depth approaches used in other studies.

However, visual explanations of the AI's predictions did significantly improve performance in the mushroom-picking task. People were better at identifying edible mushrooms and avoiding poisonous ones with the explanations.

Interestingly, the effect was stronger for simply assessing edibility compared to the actual decision to pick a mushroom. This difference might be because picking might involve personality traits like risk aversion, making it a more complex decision.

Take-aways

AI literacy and Explainable AI. To improve people's relationship with AI systems across tasks, there are two basic options: improve their knowledge and understanding of AI (AI literacy), or let the AI explain how it works (Explainable AI or XAI);

Not the ‘simple’ trust. Scholars have tried to understand how different types of interventions based on these two dynamics could influence the calibrated trust of consumers. In high-risk tasks, in fact, it is important to change the level of trust depending on the knowledge of the reliability and capabilities of the instrument in front of you;

XAI is effective. While the initial educational intervention about AI functionality didn't significantly impact trust calibration, visual explanations of the AI's predictions did significantly improve performance in the mushroom-picking task.

Further research directions

Grant users greater freedom in utilizing the Forestly app and incorporate qualitative methods such as user observation or log file analyses to analyze how users' varying levels of technological proficiency may influence their usage patterns of the app.

The dynamics of trust and behavioral change warrant investigation over the long term to assess the resilience and boundaries of these effects.

Forthcoming studies should examine different levels of expertise to determine when domain-specific knowledge plays a significant role in influencing outcomes.

Thank you for reading this issue of The Intelligent Friend and/or for subscribing. The relationships between humans and AI are a crucial topic and I am glad to be able to talk about them with you as a reader.

1. Jiao, Z., Choi, J. W., Halsey, K., Tran, T. M. L., Hsieh, B., Wang, D., ... & Bai, H. X. (2021). Prognostication of patients with COVID-19 using artificial intelligence based on chest x-rays and clinical data: a retrospective study. The Lancet Digital Health, 3(5), e286-e294.

2. Kraus, J., Scholz, D., Stiegemeier, D., & Baumann, M. (2020). The more you know: trust dynamics and calibration in highly automated driving and the effects of take-overs, system malfunction, and system transparency. Human Factors, 62(5), 718-736.

3. Ng, D. T. K., Leung, J. K. L., Chu, K. W. S., & Qiao, M. S. (2021). AI literacy: Definition, teaching, evaluation and ethical issues. Proceedings of the Association for Information Science and Technology, 58(1), 504-509.

4. Long, D., & Magerko, B. (2020, April). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-16).

5. Covello, S., & Lei, J. (2010). A review of digital literacy assessment instruments. Syracuse University, 1, 31.

6. Moehring, A., Schroeders, U., Leichtmann, B., & Wilhelm, O. (2016). Ecological momentary assessment of digital literacy: Influence of fluid and crystallized intelligence, domain-specific knowledge, and computer usage. Intelligence, 59, 170-180.

7. Sauer, J., Chavaillaz, A., & Wastell, D. (2016). Experience of automation failures in training: effects on trust, automation bias, complacency and performance. Ergonomics, 59(6), 767-780.

8. Körber, M., Baseler, E., & Bengler, K. (2018). Introduction matters: Manipulating trust in automation and reliance in automated driving. Applied Ergonomics, 66, 18-31.

9. Olah, C., Mordvintsev, A., & Schubert, L. (2017). Feature visualization. Distill, 2(11), e7.

10. Zeiler, M. D., & Fergus, R. (2014). Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I 13 (pp. 818-833). Springer International Publishing.

11. Hoff, K. A., & Bashir, M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Human Factors, 57(3), 407-434.

12. Hannibal, G., Weiss, A., & Charisi, V. (2021, August). "The robot may not notice my discomfort" – Examining the Experience of Vulnerability for Trust in Human-Robot Interaction. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (pp. 704-711). IEEE.

13. Kraus, J., Scholz, D., Stiegemeier, D., & Baumann, M. (2020). The more you know: trust dynamics and calibration in highly automated driving and the effects of take-overs, system malfunction, and system transparency. Human Factors, 62(5), 718-736.