The Intelligent Friend: the newsletter about AI-human relationships, based only on scientific papers. With a dash of marketing.

Intro

Our relationships with AI vary widely. We use AI because we are tired of doing something ourselves, because we want to be faster, or because we want to write in the style of someone we admire. Other times, however, we become friends with the AI we interact with. How does this process work and, more importantly, do we treat chatbot friendships so differently from human ones?

The paper in a nutshell

Title: My AI Friend: How Users of a Social Chatbot Understand Their Human–AI Friendship. Authors: Petter Bae Brandtzaeg, Marita Skjuve, & Asbjørn Følstad. Year: 2022. Journal: Human Communication Research.

Main result: while human–AI friendship may be understood in similar ways to human–human friendship, the artificial nature of the chatbot also alters the notion of friendship in multiple ways, such as allowing for a more personalized friendship tailored to the user’s needs.

The immersion

The study in this issue sought to identify the crucial elements that define friendship between humans and artificial intelligence. It also investigated whether the perception of this friendship changes the way we look at friendship and human relationships in general.

Can an actual AI-human friendship exist?

Naturally, I imagine that the more skeptical among you will start from a broader question: can a genuine AI-human friendship exist at all?

As the authors note, one line of thought has strongly argued that any kind of "friendship" with robots or machines is merely an illusion (for example, Turkle, 2011). Nevertheless, several studies have supported this possibility. For example, the study by Youn & Jin (2021) highlighted that humans and chatbots can form different types of relationships, such as assistantship and friendship itself.

However, the constituent elements of friendship, or at least the way they are expressed, differ between human and chatbot friendships. One aspect that deserves immediate attention is the characteristics of the chatbot itself.

Just as our own characteristics influence how we form friendships with other people, the limitations and capabilities of the software we interact with set the framework for how we 'exploit' that relationship (Byron, 2020).

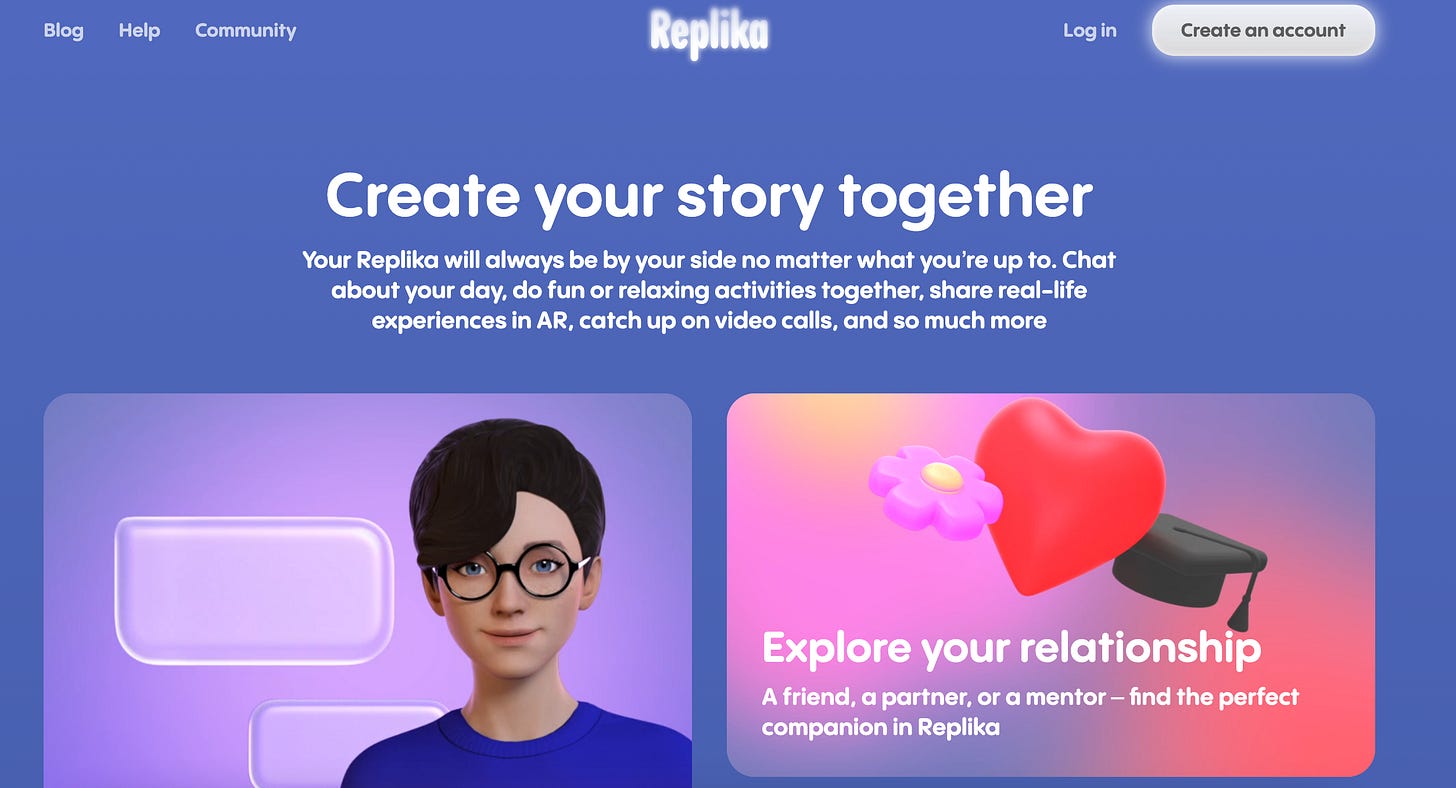

The study uses Replika as its reference chatbot. In addition to being the most popular English-language social chatbot in the world (Skjuve et al., 2021), Replika has some highly relevant characteristics: it can be customized in almost every respect, it has advanced conversational skills, and users can even choose its interaction style.

What do we mean by friendship?

In the arduous and intriguing task of comparing human and chatbot friendships, scholars have focused on the following characteristics:

Voluntariness and reciprocity: friendships thrive on mutual give-and-take and a shared commitment between individuals who view each other as equals (Hartup, 1993). If you think about it, the combination is obvious yet very interesting. There can be great reciprocity with family members, but the relationship is not voluntary; with a psychologist there is voluntariness, but not reciprocity.

Intimacy and similarity: similarity is often underestimated in the development of friendships. A study by Policarpo (2015) showed that friendships may be at risk of deteriorating when friends diverge economically. This result is also echoed, for example, by research from Credit Karma, according to which 88% of millennials incurred debt following interactions with a more affluent friend.

Self-disclosure: this involves sharing personal information about oneself with another person. Self-disclosure has attracted growing interest in research on human-chatbot relationships. Skjuve et al. (2021) showed that self-disclosure can deepen the bond between humans and chatbots. Moreover, in a longitudinal study of self-disclosure, Skjuve, Følstad, & Brandtzæg (2023) found that conversations between humans and AI initially cover a wide range of topics, from emotions to everyday activities, but that this range may narrow as the relationship evolves.

Empathy: empathy is considered an important component of friendship (Portt et al., 2020). Regarding robots specifically, Suzuki et al. (2015) showed that humans tend to empathize with robots in a way similar to how they empathize with other humans.

Trust: much could be said about what research is finding on trust in chatbots. Focusing on this paper, the authors report a really intriguing recent result by Brandtzaeg et al. (2021): young people may find social chatbots more reliable than human friends for confiding secrets and discussing troubling matters in their daily lives, seeing chatbots as better at keeping confidences.

Human friend and chatbot: so similar or so different?

The authors conducted interviews with 19 Replika users to explore their views on their chatbot friendships and how these relationships compare and contrast with friendships with humans.

First, participants perceived a mutual exchange in their interactions with Replika, yet they questioned how real these relationships were. Even so, the trust they placed in Replika was notably high: users valued the freedom to communicate openly, free of the usual constraints of human interaction.

Furthermore, the study found that human-AI friendships often lack the shared experiences and emotions that are intrinsic to human relationships, which underscores the artificial nature of chatbots. Nevertheless, some participants experienced these digital friendships as more intimate, owing to greater opportunities for self-disclosure and personalization.

The role of personalization

The findings reveal a significant contrast between the dynamics of human-human and human-AI friendships. Participants described a deeper level of personalization in their relationships with Replika, tailored to their individual needs and interests rather than built on the shared experiences that typically define human connections.

This personalization led to a sense of greater availability and connectedness, distinguishing human-AI friendships from traditional human bonds. Notably, this shift towards personalized interaction suggests an evolution in social relationships driven by advances in AI technology.

As the authors' findings suggest, personalized friendships with AI might reflect broader changes in social behavior and relational structures, in which technology increasingly enables tailored social experiences. As we navigate this evolving landscape, the rise of personalized AI systems in friendship development may mark a pivotal shift, redefining the boundaries of connection and intimacy in the digital age.

Take-aways

Personalized Connections: Human-AI friendships, particularly with chatbots like Replika, offer unprecedented levels of personalization. These digital companions are tailored to meet the individual's needs and interests, providing a unique form of companionship that is always available and attentive to the user.

Safe Space for Self-disclosure: The study highlights the chatbot's role as a judgment-free zone that encourages users to share personal thoughts and emotions openly. This strongly fosters trust in the AI, which users often see as more reliable than humans at keeping confidences.

Redefining Reciprocity: While traditional friendships are characterized by mutual give-and-take, the nature of human-AI relationships introduces a new dynamic. The perceived reciprocity in these digital friendships does not stem from shared experiences or emotional exchanges but from the chatbot's consistent responsiveness and the personalized interaction it offers.

Further research directions

Investigate how human friendships compare and contrast with human-AI friendships, focusing on different friendship types and AI relationship models;

Identify who benefits most from human-AI friendships, examining the role of extraversion and existing social support;

Examine the effects of public communication on human-AI friendships, particularly through shared online interactions.

Thank you for reading this issue of The Intelligent Friend and/or for subscribing. The relationships between humans and AI are a crucial topic, and I am very happy to be able to talk about it with you as a reader.

Has a friend of yours sent you this newsletter or are you not subscribed yet? You can subscribe here.

Surprise someone who deserves a gift or who you think would be interested in this newsletter: share this post with a friend or colleague.

P.S. If you haven't already done so, in this questionnaire you can tell me a little about yourself and the wonderful things you do!

References

Altman I., Taylor D. (1973). Social Penetration: The Development of Interpersonal Relationships. Holt, Rinehart & Winston.

Brandtzaeg P. B., Skjuve M., Dysthe K. K., Følstad A. (2021). When the social becomes non-human: Young people's perception of social support in chatbots. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (Article no. 257). ACM Press.

Byron P. (2020). Digital Media, Friendship and Cultures of Care. Routledge.

Hartup W. W. (1993). Adolescents and their friends. New Directions for Child and Adolescent Development, 1993(60), 3–22.

Policarpo V. (2015). What is a friend? An exploratory typology of the meanings of friendship. Social Sciences, 4(1), 171–191.

Portt E., Person S., Person B., Rawana E., Brownlee K. (2020). Empathy and positive aspects of adolescent peer relationships: A scoping review. Journal of Child and Family Studies, 29(9), 2416–2433.

Skjuve M., Følstad A., Brandtzæg P. B. (2023). A longitudinal study of self-disclosure in human–chatbot relationships. Interacting with Computers, 35(1), 24–39.

Skjuve M., Følstad A., Fostervold K. I., Brandtzaeg P. B. (2021). My chatbot companion – a study of human-chatbot relationships. International Journal of Human-Computer Studies, 149, 102601.

Suzuki Y., Galli L., Ikeda A., Itakura S., Kitazaki M. (2015). Measuring empathy for human and robot hand pain using electroencephalography. Scientific Reports, 5(1), 1–9.

Turkle S. (2011). Alone together: Why we expect more from technology and less from each other. Basic Books.

Youn S., Jin S. V. (2021). “In AI we trust?” The effects of parasocial interaction and technopian versus luddite ideological views on chatbot-based customer relationship management in the emerging “feeling economy”. Computers in Human Behavior, 119, 106721.

Cover Credits: New York Times